OpenAI has officially introduced three new models: GPT-4.1, GPT-4.1 mini, and GPT-4.1 nano. All three support a context window of up to 1 million tokens and have a knowledge cutoff of June 2024.

The company says these models outperform the recently updated GPT-4o as well as GPT-4o mini, which was released last July. GPT-4.1 is available only through the API, so you can't use it directly in ChatGPT.

Instead, OpenAI says many of the improvements in instruction following, coding, and intelligence have been gradually built into the latest version of GPT-4o in ChatGPT, and more will be added in future releases.
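For readers who want to try the models through the API, here is a minimal sketch using OpenAI's official Python SDK (the `openai` package) and its Chat Completions endpoint. It assumes an `OPENAI_API_KEY` environment variable and the model IDs `gpt-4.1`, `gpt-4.1-mini`, and `gpt-4.1-nano`; the `build_request` helper is hypothetical, written only for this illustration.

```python
import os

# Assumed API model IDs for the three GPT-4.1 variants.
GPT41_MODELS = ("gpt-4.1", "gpt-4.1-mini", "gpt-4.1-nano")


def build_request(model: str, prompt: str) -> dict:
    """Hypothetical helper: build keyword arguments for a Chat Completions call."""
    if model not in GPT41_MODELS:
        raise ValueError(f"expected one of {GPT41_MODELS}, got {model!r}")
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }


# Only contact the API when run as a script with a key configured.
if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    # Imported lazily so build_request works without the SDK installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        **build_request("gpt-4.1-mini", "Summarize the GPT-4.1 release in one sentence.")
    )
    print(response.choices[0].message.content)
```

Swapping the model string is the only change needed to compare the full, mini, and nano variants on the same prompt.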

Benchmarks show notable improvements. GPT-4.1 scored 54.6% on SWE-bench Verified, a 21.4-percentage-point increase over GPT-4o. It scored 38.3% on MultiChallenge, an instruction-following benchmark, and set a new record for long-video understanding with 72.0% on Video-MME, where models answer questions about videos up to an hour long without subtitles.
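The 21.4 figure is an absolute gain in percentage points, not a relative one. Working backwards from the numbers above gives GPT-4o's implied SWE-bench Verified score and the relative improvement:

$$
54.6\% - 21.4\ \text{pp} = 33.2\%, \qquad \frac{54.6}{33.2} \approx 1.64
$$

In relative terms, GPT-4.1 solves roughly 64% more SWE-bench Verified tasks than GPT-4o.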

OpenAI has also collaborated with alpha partners to test the performance of GPT-4.1 in real-world use cases.

- Thomson Reuters tested GPT-4.1 with CoCounsel, its legal AI assistant. Compared with GPT-4o, GPT-4.1 improved multi-document review accuracy by 17%. This kind of work depends on tracking context across multiple sources and identifying complex relationships such as conflicting clauses or hidden dependencies, and GPT-4.1 performed consistently well.

- Carlyle used GPT-4.1 to extract financial data from long, complex documents, including PDFs and Excel files. On the company's internal benchmarks, the model performed 50% better than previous models at retrieving information from large documents. It was also the first model to reliably overcome problems such as needle-in-a-haystack retrieval, lost-in-the-middle errors (missing information buried in the middle of a document), and reasoning that requires connecting information across multiple files.

Performance matters, but so does speed. OpenAI says GPT-4.1 returns its first token in about 15 seconds with 128,000 tokens of context, and in up to 30 seconds with a full million tokens. GPT-4.1 mini and nano are faster still.

GPT-4.1 nano usually returns its first token in under five seconds for prompts with 128,000 input tokens. Prompt caching can cut latency further while also reducing costs.

Image understanding has also improved significantly, with GPT-4.1 mini in particular often beating GPT-4o on vision benchmarks.

- On MMMU (questions involving charts, diagrams, maps, and similar images), GPT-4.1 mini scored 73%, beating GPT-4o and far exceeding GPT-4o mini's 56%.

- On MathVista (solving math problems presented visually), GPT-4.1 and GPT-4.1 mini also score well above GPT-4o mini.

- On CharXiv-Reasoning, where models answer questions about charts from scientific papers, GPT-4.1 and GPT-4.1 mini both scored 57%, far surpassing GPT-4o mini's 37%.

- On Video-MME (long videos without subtitles), GPT-4.1 achieved 72%, a significant improvement over GPT-4o's 65%.

About price:

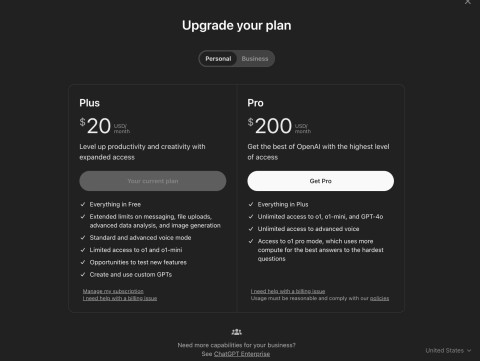

- GPT-4.1 costs $2 per 1 million tokens input and $8 for output.

- GPT-4.1 mini is priced at $0.40 for input and $1.60 for output.

- GPT-4.1 nano costs $0.10 input and $0.40 output.

Prompt caching and the Batch API can lower these costs further, which helps large-scale applications. OpenAI is also deprecating GPT-4.5 Preview in the API, with shutdown scheduled for July 14, 2025, saying GPT-4.1 offers improved or similar performance on many key capabilities at much lower cost and latency.
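The per-million-token prices translate directly into per-request costs. Below is a minimal sketch of a cost estimator built on those list prices. The `estimate_cost` helper and `PRICES` table are hypothetical, not part of any OpenAI SDK. The discount rates are assumptions based on OpenAI's launch announcement, not figures stated in this article: a 75% discount on cached input tokens and a 50% discount for Batch API requests. It is also an assumption that the two discounts stack, so check current pricing before relying on the numbers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Price:
    input_per_m: float   # USD per 1 million input tokens
    output_per_m: float  # USD per 1 million output tokens


# List prices from the article.
PRICES = {
    "gpt-4.1": Price(2.00, 8.00),
    "gpt-4.1-mini": Price(0.40, 1.60),
    "gpt-4.1-nano": Price(0.10, 0.40),
}

# ASSUMPTIONS (not from the article): verify against OpenAI's pricing page.
CACHED_INPUT_DISCOUNT = 0.75  # cached input billed at 25% of the input price
BATCH_DISCOUNT = 0.50         # Batch API billed at 50% of the computed price


def estimate_cost(model: str, input_tokens: int, output_tokens: int,
                  cached_input_tokens: int = 0, batch: bool = False) -> float:
    """Return an estimated request cost in USD (hypothetical helper)."""
    if model not in PRICES:
        raise ValueError(f"unknown model: {model}")
    if not 0 <= cached_input_tokens <= input_tokens:
        raise ValueError("cached_input_tokens must be between 0 and input_tokens")
    p = PRICES[model]
    uncached = input_tokens - cached_input_tokens
    cost = (
        uncached * p.input_per_m
        + cached_input_tokens * p.input_per_m * (1 - CACHED_INPUT_DISCOUNT)
        + output_tokens * p.output_per_m
    ) / 1_000_000
    if batch:
        # Assumed to apply on top of any caching discount.
        cost *= 1 - BATCH_DISCOUNT
    return cost


if __name__ == "__main__":
    # Cost of filling the full 1M-token context and generating 1,000 tokens.
    for name in PRICES:
        print(f"{name}: ${estimate_cost(name, 1_000_000, 1_000):.4f}")
```

Keeping prices in one table makes it easy to update the estimator if OpenAI changes its rates, and to compare tiers for a given workload.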