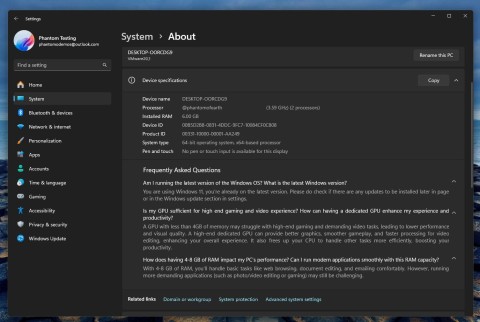

In January, Microsoft announced plans to bring NPU-optimized versions of the DeepSeek-R1 model directly to Copilot+ PCs running on Qualcomm Snapdragon X processors. By February, the first of these, DeepSeek-R1-Distill-Qwen-1.5B, was available in the AI Toolkit for VS Code.

Today, the Redmond company officially announced that it is making the distilled DeepSeek R1 7B and 14B models available for Copilot+ PCs via Azure AI Foundry. Running 7B and 14B models locally on a Copilot+ PC lets developers build more capable AI applications that were previously impractical on-device.

Because these models run on the NPU, users can expect sustained AI performance with minimal impact on the PC's battery life and thermals. The CPU and GPU also remain free to handle other tasks on the system.

Microsoft notes that it used Aqua, an in-house automatic quantization tool, to quantize all variants of the DeepSeek model down to int4. However, token throughput is still modest: Microsoft reports only about 8 tokens per second for the 14B model and nearly 40 tokens per second for the 1.5B model. The company says it is continuing to optimize for speed, and as performance improves, these models are expected to become considerably more useful on Copilot+ PCs.

Interested developers can download and run the 1.5B, 7B, and 14B variants of the DeepSeek model on Copilot+ PCs via the AI Toolkit extension for VS Code. The models are optimized in the ONNX QDQ format and are downloaded directly from Azure AI Foundry. They will also be coming to Copilot+ PCs powered by Intel Core Ultra 200V and AMD Ryzen processors.

Many have argued that Microsoft is taking a risk by partnering with DeepSeek. Integrating DeepSeek into the Windows ecosystem could get Microsoft into trouble if allegations of technology theft are proven. Still, DeepSeek has undeniably made a big step forward in the AI market. For Microsoft, this move represents a push toward more powerful on-device AI, opening up new possibilities for AI-powered applications.